Getting into the minds of our users

The intersection of UX and Neuroscience.

Taylor Green

Jul 27, 2020

9 min read

As UX professionals, we constantly observe human behavior, from examining users interacting with products to gathering data to inform our designs. We assess the goals, needs, and reactions of our core users, yet we rarely dive deeper than that.

How often are we thinking about the underlying cognitive functions and biological processes that provoke customer behavior?

We don’t necessarily need this information to do our job, similar to how users don’t need to know how the back-end of our products works to use them.

However, imagine how much more effective UX designers could be if we understood the full end-to-end process of human behavior. If we were aware of how the brain produces a result, we could optimize our research experiments and better articulate our creative direction to stakeholders.

Let’s use a quick analogy — our brain can be thought of as the ‘back-end’ or underlying processes that allow the user to perform a task. Meanwhile, the ‘front-end’ can be thought of as the output or overt behavior of the user.

Time to learn some fundamental terms…

Note: Given the vast complexity of the brain, what I cover here barely scratches the surface. For the sake of this article, I tried to keep it high-level; however, I do mention some technical terms. I recommend focusing less on the names and more on gaining an understanding of the relevant concepts of human behavior. (Links to learn more are provided if you want to dive deeper.)

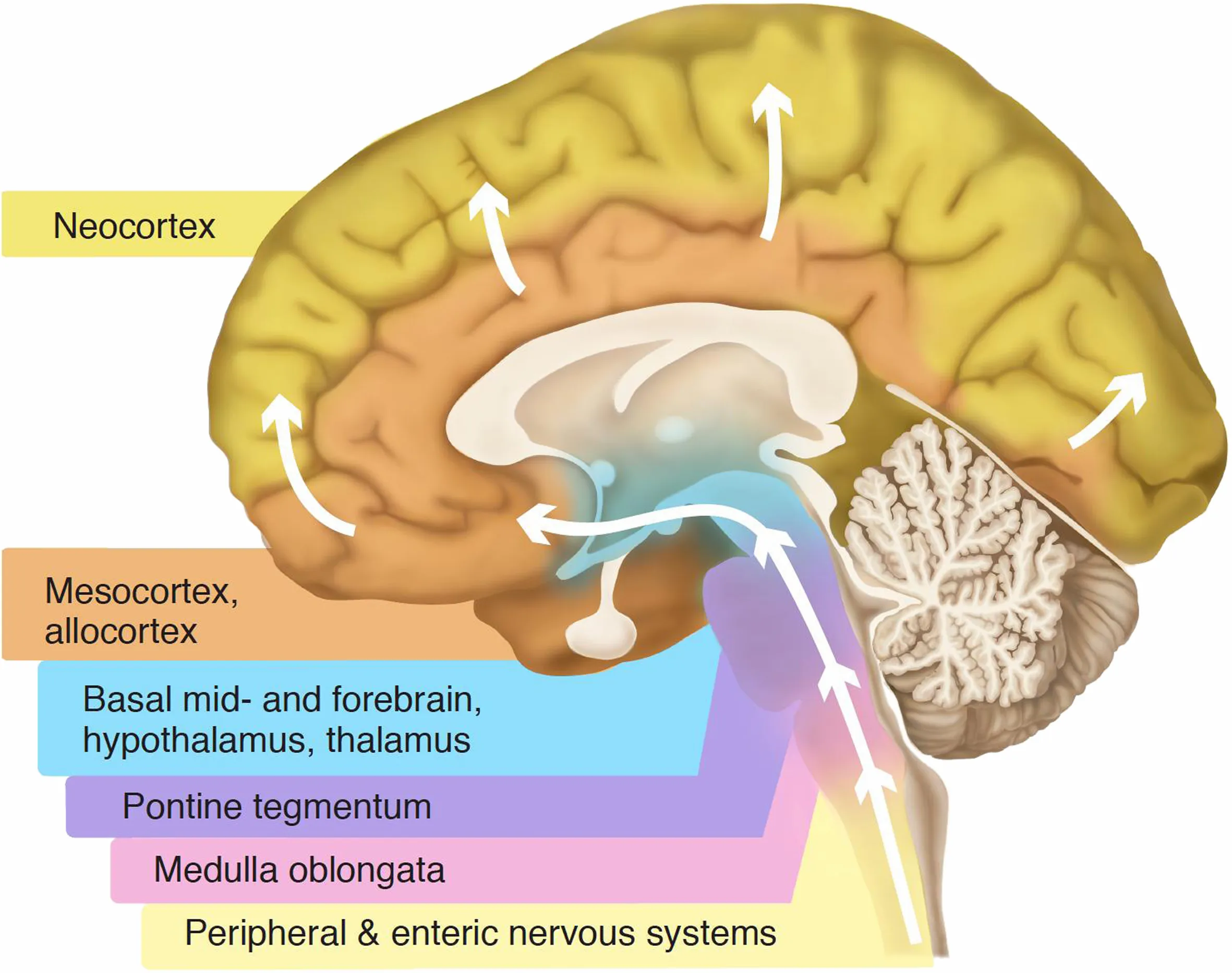

What are the major parts of the brain?

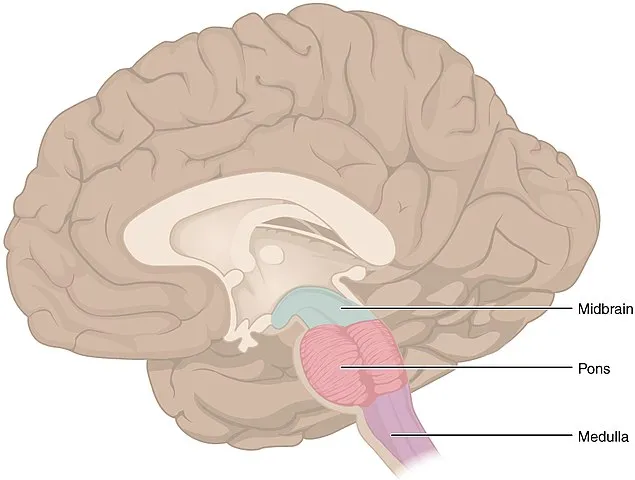

1) The brainstem

The brainstem is one of the evolutionarily oldest parts of the brain (often cited as ~300 million years old) and is the stalk that connects the brain and the spinal cord. It is essential for survival, helping to regulate our breathing and other core body activities, and it also supports cognitive function.

It is composed of the midbrain, the pons, and the medulla oblongata.

The midbrain contains clusters of neurons that have various functions (e.g. superior and inferior colliculi).

The superior colliculi are thought to be involved in directing behavioral responses toward stimuli in the environment.

The pons is the bridge connecting the medulla and the midbrain. It contains various tracts and nuclei, each with a different purpose, including one of the major pathways for information to travel from the brain and brainstem to the cerebellum.

The pons contains nuclei that deal with facial sensations, motor control of the eyes, face, and mouth, hearing, equilibrium, and various autonomic functions.

Last but not least, the medulla oblongata is the part of the brainstem that connects to the spinal cord. The medulla is essential for survival and contains nuclei that control cardiovascular and respiratory functions, as well as reflexive actions (e.g. coughing, sneezing).

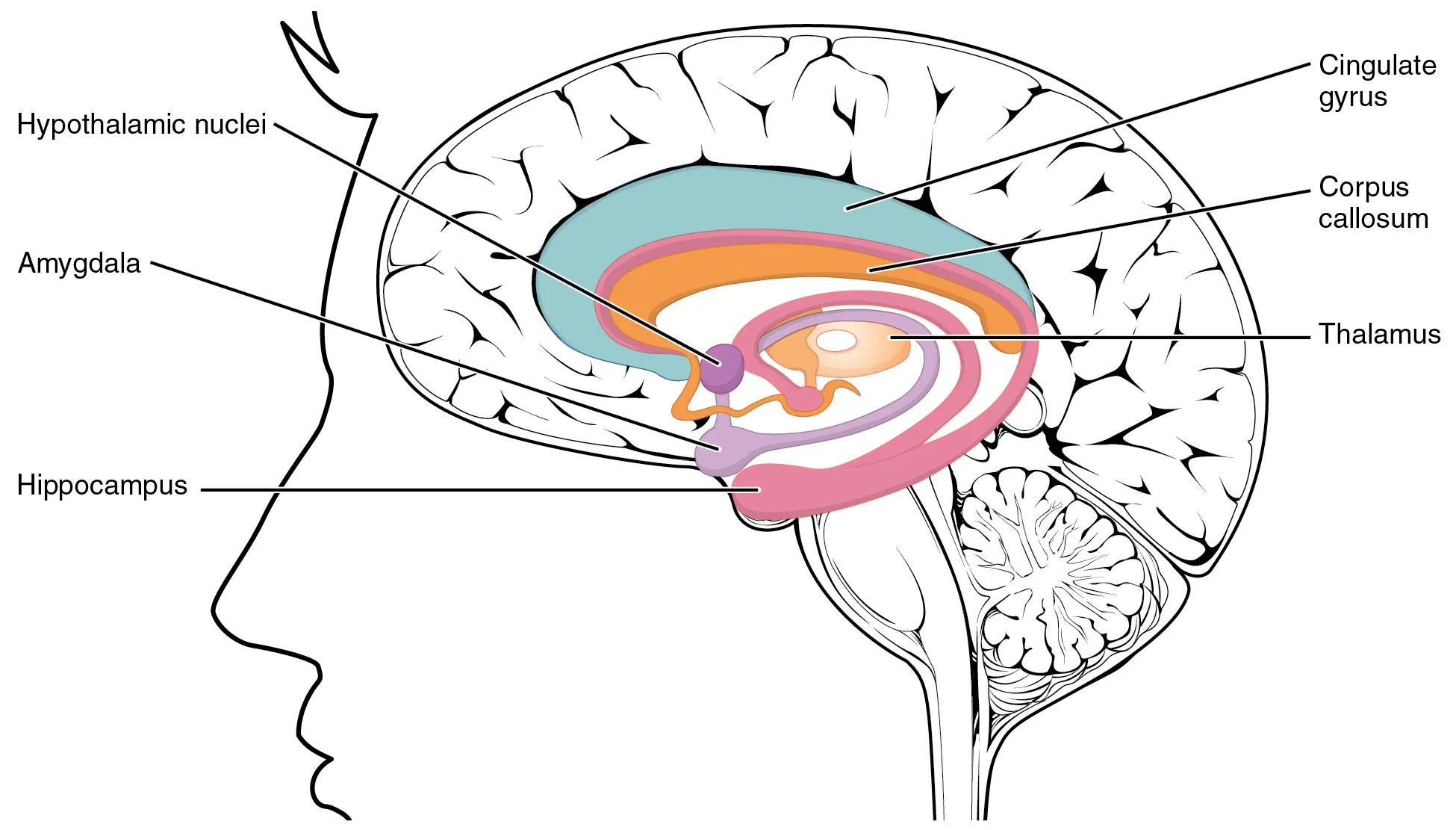

2) The limbic system (emotional brain)

The limbic system is most frequently thought of as the emotional center of our brain. It contains various structures dealing with emotion and memory. The most notable structures are the amygdala, the hippocampus, and the hypothalamus.

The limbic system regulates autonomic and endocrine function in response to emotional stimuli and is also involved in reinforcing behavior.

The amygdala is an almond-shaped collection of nuclei that are involved with fearful and anxious emotions. The hippocampus is interconnected with the amygdala and is mainly associated with memory. The hypothalamus controls hormone release, maintains daily physiological cycles, and regulates emotional responses.

3) Neocortex (logical brain)

The neocortex is the “newer” part of our brain (it emerged with early mammals, roughly 200 million years ago) and supports conscious thought and logical reasoning. Within the neocortex is the prefrontal cortex, which is especially developed in humans and is closely tied to our capacity for reason and intellect.

This is the part of the brain that handles text, logic, rationalization, and complex emotion. Other executive functions of the prefrontal cortex include planning, judgment, attention, and decision-making.

How does this apply to human-centered design?

As UX professionals, we have the unique opportunity to leverage this knowledge of memory, human emotions, and patterns to build optimal designs that help users achieve their goals. It’s in our best interest to build products that activate reward systems and minimize the load on memory (i.e. hippocampus).

Activity in specific regions of the brain is associated with the release of certain neurotransmitters. For example, if our designs engage the dopamine pathway (dopamine is a neurotransmitter tied to reward and motivation, often nicknamed our happiness hormone), customers are more likely to come away with a positive experience.

Additionally, this knowledge of cognitive activity will allow us to predict user behavior and form more grounded hypotheses in our research.

How do we employ this in our research and designs?

1) Better research/testing methodologies

First, we can enlist this knowledge to create more reliable testing mechanisms. Normally, we rely on users to verbally express their thoughts and reactions, which is a good way to get some initial feedback.

However, are we relying too heavily on users’ verbal cues?

Participants (and researchers) are not 100 percent objective all the time. For example, often users will tell us what they think we want to hear. Not only that, but it can be difficult to accurately convey our emotions verbally, and therefore, our results end up being subjective to some extent.

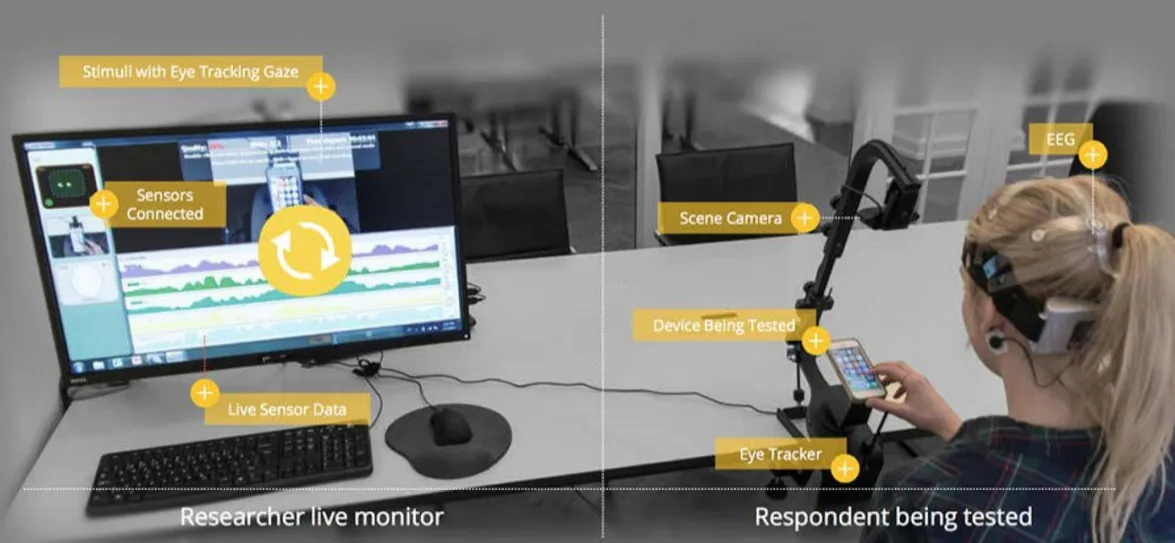

Imagine a usability or user testing session where we could mitigate subjectivity or biases — one in which we could examine nonverbal cues. Through various tracking tools, we could uncover human emotion and detect frustration or satisfaction.

For example, electroencephalography (EEG) is an effective method for measuring electrical activity in the brain via electrodes placed on the scalp. The signals obtained from these electrodes are represented by waveforms reflecting voltage variation over time.

EEG is a highly sensitive instrument and offers several useful features: it tracks brain activity in real time with millisecond-level precision, and it can capture a broad range of emotional and cognitive activity. Some software can also display an on-the-fly representation of emotions.

Newer EEG headsets, such as the Emotiv headset, are wireless and connect through Bluetooth. This means they can be worn comfortably by the participant and cause minimal interference during a usability session.

Analyzing and translating these waveforms into emotional responses is complex, but there are various analysis tools to help interpret raw EEG data.

These tools can produce visualizations that correspond to a standardized set of emotional states, such as engagement, interest, frustration, happiness, or sadness.
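To make the idea of interpreting raw EEG data a little more concrete, here is a minimal Python sketch of one common first step: estimating how much of a signal's power falls into conventional frequency bands (alpha, roughly 8 to 13 Hz, and beta, roughly 13 to 30 Hz). The signal is synthetic, the function name `band_power` is made up for illustration, and NumPy is assumed to be installed. Commercial analysis tools do far more than this, and mapping band power to an emotional state is tool-specific and not shown here.

```python
import numpy as np

def band_power(signal, sample_rate, low_hz, high_hz):
    """Average spectral power of `signal` between low_hz (inclusive) and high_hz (exclusive)."""
    spectrum = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    mask = (freqs >= low_hz) & (freqs < high_hz)
    return spectrum[mask].mean()

# Synthetic stand-in for one EEG channel (values in volts):
# a strong 10 Hz rhythm, a weaker 20 Hz rhythm, and a little random noise.
sample_rate = 256                      # samples per second (assumed)
t = np.arange(0, 4, 1.0 / sample_rate) # four seconds of data
rng = np.random.default_rng(0)
signal = (
    20e-6 * np.sin(2 * np.pi * 10 * t)
    + 5e-6 * np.sin(2 * np.pi * 20 * t)
    + 2e-6 * rng.standard_normal(t.size)
)

alpha = band_power(signal, sample_rate, 8, 13)
beta = band_power(signal, sample_rate, 13, 30)
ratio = beta / alpha                   # one simple way to compare the two bands

print(f"alpha power: {alpha:.3e}")
print(f"beta power:  {beta:.3e}")
print(f"beta/alpha:  {ratio:.3f}")
```

Because the synthetic signal is dominated by the 10 Hz component, the alpha band carries more power than the beta band. With real recordings, the values would first need artifact removal (blinks, muscle movement) before any comparison is meaningful.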

Other helpful tools include Galvanic Skin Response (GSR), which measures changes in the skin’s electrical conductance caused by sweat gland activity and serves as an indicator of physiological arousal. Combined with context, GSR can provide information about a range of states, such as engagement, stress, boredom, disinterest, and relaxation.

This measurement is ideal for detecting situations where a user is having difficulty using an interface and becoming increasingly frustrated and stressed.
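As a rough illustration of how rising stress might be spotted in GSR data, the sketch below flags moments where skin conductance has climbed noticeably over the last few samples. Everything here is an assumption made for the example: the function name `flag_rising_arousal`, the window size, the threshold, and the sample readings (in microsiemens). Real studies would calibrate these per participant.

```python
def flag_rising_arousal(samples, window=5, min_rise=0.3):
    """Return indices where conductance rose by at least `min_rise`
    compared with the reading `window` samples earlier."""
    flagged = []
    for i in range(window, len(samples)):
        if samples[i] - samples[i - window] >= min_rise:
            flagged.append(i)
    return flagged

# Hypothetical readings: a calm baseline, then a climb while the
# participant struggles with a task, then a slow recovery.
readings = [2.0, 2.0, 2.1, 2.0, 2.1, 2.1, 2.2, 2.5, 2.9, 3.2, 3.3, 3.3, 3.2, 3.1]

flags = flag_rising_arousal(readings)
print(flags)
```

The flagged indices can then be lined up with the session recording to see which screen or step the participant was on, which is a natural point to ask a follow-up question.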

There are several other tests, such as ones for detecting facial expressions, tracking eye movements, or measuring heart rate.

These physiological signs can reflect a user’s frustration or satisfaction with the product, which helps us see, in real time, the areas that need improvement. The future of UX research incorporates better methods for measuring emotional response, but the best approach remains a combination of verbal and nonverbal metrics.

Physiological testing is not 100 percent accurate either and therefore should be used in tandem with qualitative data. It’s critical to not just understand what emotion is elicited, but also why the user feels this way. It’s still important to ask users direct questions when we detect a certain emotion using nonverbal tests.

2) More intuitive and supportive experiences

Knowing that information overload is an issue in today’s society, our products should aim to reduce the cognitive load placed upon users. The interfaces we build can guide users in their decision-making process and minimize the need to memorize information.

Another neuroscience concept is mirror neurons: neurons that fire both when we perform an action and when we observe someone else performing it. They can help us understand the patterns and skills learned through imitation.

Mirror neurons are thought to help create a perception of experience. Once a pattern has formed, the brain tends to expect the same outcome from the next similar experience.

An example of this is our expectations when switching from a web app to a corresponding mobile app. If we switch between the two, we expect the experience to be relatively the same. This is where mirror neurons are thought to come into play: the brain anticipates a repeat of the same outcome. Based on previous experiences, it judges whether an experience is safe and whether the threat response can be set aside.

UX designers have the opportunity here to make sure experiences are consistent. This doesn’t just apply across breakpoints, but the experience should be consistent laterally as well. For example, if a user flips through pages in the nav, they should see a similar theme, pattern, and verbiage. If the pages look and feel different for each journey, then we are asking users to learn a new experience each time. This can lead to frustration and a negative association with the brand.

In addition, neuroplasticity is the brain’s ability to reorganize itself by forming new neural connections throughout life. As we repeat habits and behaviors, the neural pathways are reinforced. This is how we are constantly learning new skills and behaviors. Based on this, we can create interfaces that take advantage of this phenomenon by adapting interactions to users’ behavior.

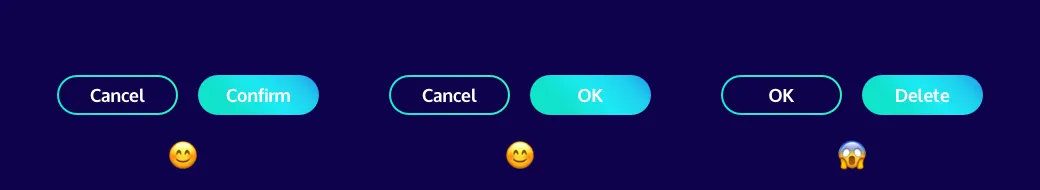

A basic example is keeping button shapes and colors consistent. As customers use our products more, they will begin to learn the patterns and familiarize themselves with how items are stylized. Therefore, primary CTAs should be one color, shape, and font style across the interface. Actionable elements should have the same functionality throughout. As in the accompanying example, imagine how confusing it would be if the ‘confirm’ and ‘delete’ CTAs were stylized the same throughout the site.
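One way to keep an eye on this kind of consistency is a small audit over an inventory of buttons. The Python sketch below is only an illustration: the inventory format, the field names (`role`, `color`, `shape`, `font`), and both function names are hypothetical. It checks for two problems: the same kind of CTA styled differently on different pages, and different kinds of CTAs that look identical.

```python
from collections import defaultdict

def style_of(button):
    """The visual attributes we compare (an assumed, simplified set)."""
    return (button["color"], button["shape"], button["font"])

def inconsistent_roles(buttons):
    """Roles (e.g. 'confirm') that appear with more than one style."""
    styles_by_role = defaultdict(set)
    for button in buttons:
        styles_by_role[button["role"]].add(style_of(button))
    return {role: styles for role, styles in styles_by_role.items() if len(styles) > 1}

def colliding_roles(buttons):
    """Groups of different roles that share exactly the same style."""
    roles_by_style = defaultdict(set)
    for button in buttons:
        roles_by_style[style_of(button)].add(button["role"])
    return [sorted(roles) for roles in roles_by_style.values() if len(roles) > 1]

# Hypothetical inventory collected from three pages.
buttons = [
    {"page": "cart", "role": "confirm", "color": "#0a7", "shape": "rounded", "font": "bold"},
    {"page": "checkout", "role": "confirm", "color": "#0a7", "shape": "pill", "font": "bold"},
    {"page": "settings", "role": "delete", "color": "#0a7", "shape": "rounded", "font": "bold"},
]

print(inconsistent_roles(buttons))  # 'confirm' is styled two different ways
print(colliding_roles(buttons))     # 'confirm' and 'delete' look identical
```

In practice the inventory might come from a design system or a crawl of the live site; the point is simply that both kinds of inconsistency can be checked mechanically once styles are recorded in one place.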

3) Stronger connection with users

Let’s use this information to our customers’ advantage: knowledge of how to tap into users’ emotions should be used only to create a more positive experience.

We can create a sensation of reward and positivity by activating the dopamine pathway. If customers have a positive feeling when using our products, then they will form a stronger connection and attachment to the brand. Through trust and engagement, we can foster a sense of connectedness with the product.

For example, Seamless does a nice job of this by saving your recent orders, so that each time you open the app you can view your previous orders. This may seem like a trivial feature, but it’s small acts like this that can allow users to feel a sense of ownership and control over their choices.

Based on our reward system pathways, users want to feel certainty, fairness, autonomy, and connectedness. Another example of this is Amazon offering reviews of products. This allows us to feel a sense of connection with other people, fulfilling our brain’s desire to fit in. Bad or good reviews, we still feel this sense of relatedness and fairness when using the website.

Conclusion

Now, more than ever, UX professionals must step up and ensure that products are being created with fairness and positivity in mind. We’re in a unique position to provide users a feeling of positivity and trust when using our products. Let’s use these neuroscience principles to create experiences that are more intuitive, supportive, and productive.

How to reach me

© 2024 Taylor Green. All Rights Reserved.

taylorgreen.work@gmail.com